BCP: Export Data to an Azure Database

BCP (bulk copy program) is a great way to transfer large volumes of data (millions of rows) to an Azure database. I found it very efficient, both in terms of ease of use and the speed with which the data could be transferred. The bcp utility is a command-line utility that ships with Microsoft SQL Server. It bulk copies data between SQL Azure (or SQL Server) and a data file in a user-specified format. The bcp utility that ships with SQL Server 2008 R2 is fully supported by SQL Azure.

Following are the steps to use this utility to export the data to a flat file and then import the data into the Azure database:

- Copy the data from the employee table into a temporary table in the local database:

  ```sql
  SELECT * INTO [temp_employee] FROM employee;
  ```

- Export the temporary table to a local file (-T uses Windows authentication and -c uses character format):

  ```
  bcp [database_name].[schema_name].[temp_employee] out c:\temp\employee.txt -S server_name -T -c
  ```

- Create the destination table in the Azure database with the same schema as the source table.

- Import the file into the Azure database (-U and -P supply the SQL authentication credentials):

  ```
  bcp [azure_database_name].[schema_name].[employee] in c:\temp\employee.txt -c -S servername.database.windows.net -U <username>@<servername> -P <password>
  ```
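If you run the export and import steps regularly, you can script them. The sketch below is a hypothetical Python helper. The names bcp_args and run_bcp are introduced here and are not part of bcp. It assembles the export and import commands from the steps above as argument lists and passes the server through -S. It uses only the bcp flags already shown: -c, -S, -T, -U and -P. Running the commands assumes bcp is installed and on the PATH.

```python
import subprocess

def bcp_args(table, direction, data_file, server, user=None, password=None):
    """Build a bcp command line as an argument list (hypothetical helper)."""
    if direction not in ("in", "out"):
        raise ValueError("direction must be 'in' or 'out'")
    # -c: character format, -S: server to connect to
    args = ["bcp", table, direction, data_file, "-c", "-S", server]
    if user is None:
        args.append("-T")                      # Windows (trusted) authentication
    else:
        if password is None:
            raise ValueError("SQL authentication needs a password")
        args += ["-U", user, "-P", password]   # SQL authentication, as used for Azure
    return args

def run_bcp(args):
    """Run bcp; raises CalledProcessError if bcp exits with a non-zero code."""
    subprocess.run(args, check=True)

export_args = bcp_args("[database_name].[schema_name].[temp_employee]", "out",
                       r"c:\temp\employee.txt", "server_name")
import_args = bcp_args("[azure_database_name].[schema_name].[employee]", "in",
                       r"c:\temp\employee.txt", "servername.database.windows.net",
                       user="<username>@<servername>", password="<password>")
# run_bcp(export_args); run_bcp(import_args)   # requires bcp on the PATH
```

Passing a list to subprocess.run avoids shell quoting problems with the bracketed table names.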

In the bulk copy output from the command window for this particular run, the most interesting thing to observe is that the data transfer rate was 5206 rows per second. I believe higher transfer rates are possible, depending on the available network bandwidth.
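The observed rate gives a rough way to estimate how long a larger transfer will take. The sketch below assumes the rate stays constant at 5206 rows per second, which real transfers will not guarantee.

```python
def transfer_minutes(rows, rows_per_second=5206):
    """Estimated bcp transfer time in minutes, assuming a constant rate."""
    return rows / rows_per_second / 60

for rows in (1_000_000, 10_000_000):
    print(f"{rows:,} rows -> about {transfer_minutes(rows):.1f} minutes")
```

At that rate, one million rows take a little over three minutes and ten million rows take about 32 minutes.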

Exporting Data out of SQL Azure

Large sets of data can be exported from SQL Azure by running the following command at the Windows command prompt:

```
bcp [database_name].[schema_name].[table_name] out C:\temp\[file_name].txt -c -U username@servername -S tcp:servername.database.windows.net -P password
```


Predictive Analysis with Monte Carlo Simulation

Introduction

I got interested in Monte Carlo simulation during one of my weekend readings; the more I read, the more I appreciated the computational algorithm. The method obtains a result (with an associated probability) from random inputs drawn within known boundaries. The modern version of the Monte Carlo method was invented in the late 1940s by Stanislaw Ulam while he was working on nuclear weapons projects at the Los Alamos National Laboratory. It was named by Nicholas Metropolis after the Monte Carlo Casino, where Ulam’s uncle often gambled. [1] In this post I will briefly cover the basics of Monte Carlo simulation. I will then detail a scenario where I applied the basic method in MS-Excel to capacity planning for a fictitious company.

Monte Carlo Simulation

Monte Carlo methods (or Monte Carlo experiments) are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results; typically one runs simulations many times over in order to obtain the distribution of an unknown probabilistic entity. [1] This is a stochastic simulation because randomness is involved, so the outcome will most likely differ from run to run. Since the outcome varies with every simulation, the question that begs an answer is: how many times does the simulation need to be run to gain confidence in the outcome it generates? The method assumes that a randomly selected sample exhibits the same behavior as the entire population from which it is drawn. Running the simulation more times makes the experiment more reproducible. Inferential statistics obtained from several thousand runs produce a more general result, because the spread of the estimate narrows as the number of random samples increases. Using all the results of the simulations, we can perform statistical analysis to generate the various outcomes and their corresponding probabilities of occurrence. Following are the four steps involved in applying this model to a given situation:

- Identify a mathematical model of the activity or process you want to explore.

- Define the parameters (like mean and standard deviation) for each factor in your model.

- Create random data according to those parameters.

- Simulate and analyze the output of your process.
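The claim that estimates tighten as the number of samples grows can be checked with a small experiment. This sketch uses the growth-rate mean and standard deviation from the capacity-planning example later in this post. It estimates the mean growth rate many times with 100 samples and with 10,000 samples, then compares how much those estimates scatter. The function names are illustrative only.

```python
import random
import statistics

# Growth-rate parameters from the capacity-planning example later in this post
MEAN, SD = 0.1795, 0.5398

def estimate_mean(n, rng):
    """One Monte Carlo estimate: the average of n random draws from N(MEAN, SD)."""
    return statistics.fmean(rng.gauss(MEAN, SD) for _ in range(n))

def spread_of_estimates(n, repeats=100, seed=42):
    """Standard deviation of `repeats` independent estimates that each use n samples."""
    rng = random.Random(seed)
    return statistics.stdev([estimate_mean(n, rng) for _ in range(repeats)])

small = spread_of_estimates(100)
large = spread_of_estimates(10_000)
print(f"spread with 100 samples: {small:.4f}, with 10,000 samples: {large:.4f}")
```

The spread should come out roughly ten times smaller for 10,000 samples, because the standard error of a mean shrinks in proportion to the square root of the sample count.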

Capacity Planning

In this example, we have an online business that has been running for the last few years. The company has seen a year-over-year increase in the number of orders it receives. The business would like to perform capacity planning so that it can prepare its operations to handle the transaction load for the next five years. The key information it needs at this time is the total number of orders the business could potentially generate five years from now. Let us look at how we can use Monte Carlo as a computational model in Excel to provide this key information.

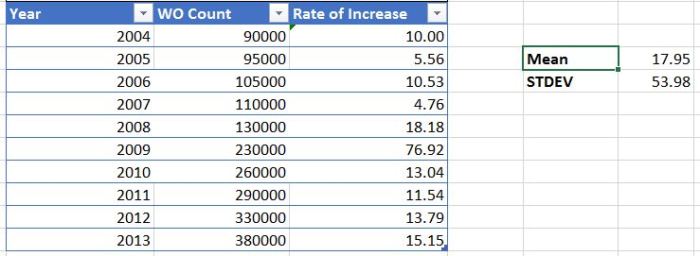

The following table shows the number of orders that the business received every year for the last 10 years, along with the year-over-year rate of increase in orders for the same period. We will use this input to determine the mean rate of increase in orders and the standard deviation of the year-over-year growth rate. The mean, computed as the average of the growth rates for the last 10 years, is 17.95%. The standard deviation measures the amount of variation or dispersion from the average and was calculated to be 53.98%.
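The two input parameters can be computed as sketched below. The real yearly order counts are only in the table image, so the counts here are made up for illustration. The post does not say whether the spreadsheet used the sample (STDEV.S) or population (STDEV.P) standard deviation, so the sketch assumes the sample version.

```python
import statistics

def growth_rates(order_counts):
    """Year-over-year growth rate r = (current - previous) / previous for each pair of years."""
    return [(cur - prev) / prev for prev, cur in zip(order_counts, order_counts[1:])]

# Hypothetical order counts for illustration only; the post's real data is in the table image
orders = [1000, 1500, 1200, 1800]
rates = growth_rates(orders)
mean_rate = statistics.mean(rates)     # Excel: AVERAGE(...)
sd_rate = statistics.stdev(rates)      # sample SD, Excel: STDEV.S(...)
print(f"mean {mean_rate:.2%}, standard deviation {sd_rate:.2%}")
```

For these made-up counts, the rates are 50%, -20% and 50%. That gives a mean of about 26.67% and a standard deviation of about 40.41%.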

Let us assume that the rate of increase follows a normal distribution. I have used NORM.INV(RAND(), Mean, SD) to introduce randomness into the distribution. Now we can apply the same distribution, with its mean and standard deviation, to each of the next five years to obtain our first possible outcome. The order count for 2014 has been computed using the formula p(1 + r), where p is the previous year's order count and r is the randomly generated growth rate for the year. Applying the same formula for each of the next five years gives the following results, which vary every time we run the simulation with new random numbers.
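For readers working outside Excel, here is a minimal Python counterpart of one trial. NORM.INV(RAND(), mean, sd) is inverse-transform sampling: feed a uniform random number into the inverse normal CDF. Python's NormalDist.inv_cdf does the same job. The starting order count of 450,000 is a hypothetical value, not the figure from the post's table.

```python
import random
from statistics import NormalDist

MEAN, SD = 0.1795, 0.5398   # mean and standard deviation of the historical growth rate
START_ORDERS = 450_000      # hypothetical order count for the last known year

def norm_inv_rand(rng, mean=MEAN, sd=SD):
    """Counterpart of Excel's NORM.INV(RAND(), mean, sd): inverse-transform sampling."""
    u = rng.random()
    while u == 0.0:             # random() may return 0.0, which inv_cdf rejects
        u = rng.random()
    return NormalDist(mean, sd).inv_cdf(u)

def one_trial(start, years, rng):
    """One possible outcome: apply p * (1 + r) each year with a freshly drawn r."""
    path, p = [], start
    for _ in range(years):
        p = p * (1 + norm_inv_rand(rng))
        path.append(p)
    return path

trial = one_trial(START_ORDERS, 5, random.Random())
print([round(x) for x in trial])   # differs on every run, like recalculating the sheet with F9
```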

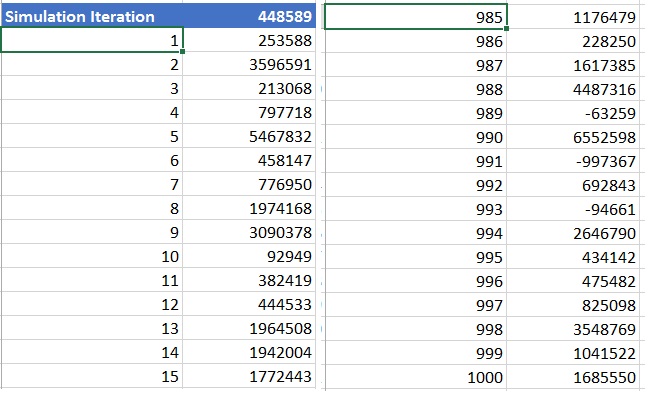

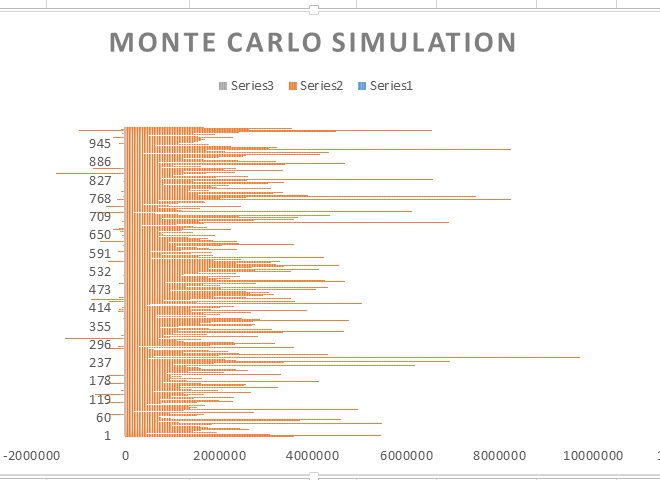

Now we can simulate running the same experiment several hundred or thousand times using the Data Table feature of “What-If” analysis in MS-Excel. We can then compute the mean of all the outcomes to arrive at an order count that could be very close to the actual value, if the same rate of growth is sustained for the next five years. The following two pictures show some results from a simulation of 1000 iterations with actual values, along with a graph of the iterations plotted against the 1000 outcomes.
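The Data Table step simply repeats the trial many times and summarizes the outcomes. The Python sketch below does the same with 1000 iterations and reports the mean and the 5th and 95th percentiles. The starting count of 450,000 is again hypothetical. Like the p(1 + r) formula as described, the sketch does not clamp growth rates below -100%, which a normal distribution with this large a standard deviation can occasionally produce.

```python
import random
import statistics

MEAN, SD = 0.1795, 0.5398
START_ORDERS = 450_000   # hypothetical 2013 order count; the real figure is in the post's table

def orders_after(years, rng):
    """One iteration: compound a normally distributed growth rate for `years` years."""
    p = START_ORDERS
    for _ in range(years):
        # A normal rate can fall below -100%; this sketch does not clamp it
        p *= 1 + rng.gauss(MEAN, SD)
    return p

def simulate(iterations=1000, years=5, seed=None):
    """Plays the role of an Excel Data Table with `iterations` recalculated rows."""
    rng = random.Random(seed)
    return [orders_after(years, rng) for _ in range(iterations)]

outcomes = simulate(1000, seed=2018)
mean_outcome = statistics.fmean(outcomes)
cuts = statistics.quantiles(outcomes, n=20)   # cut points at 5%, 10%, ..., 95%
p5, p95 = cuts[0], cuts[-1]
print(f"mean {mean_outcome:,.0f}, 90% of outcomes between {p5:,.0f} and {p95:,.0f}")
```

Reporting percentiles alongside the mean gives the business a range to plan for, not just a single number.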

The above graph shows the results of the 1000 iterations plotted against the computed order count for the year 2018, which is the output of each iteration. Analyzing the output of the simulation, we can see that the mean of the 1000 outcomes varies between 1.0 and 1.1 million orders from run to run. When I later ran the same simulation with 10,000 iterations, I noticed that the spread of the mean tightened further. Depending on the application, the number of iterations can be very high, limited only by the computing power at hand. The business could use this information to plan for handling its future workload. We have seen how Monte Carlo simulation can be used to predict future values from inputs whose uncertainty is known and bounded. This computational model is used in many fields, including mathematics, physical sciences, engineering, finance, project management and computational biology.

References:

1. Monte Carlo Methods – Wikipedia

2. Monte Carlo Is Not as Difficult as You Think – Eston Martz

SQL Server Storage: Files, Filegroups and RAID

Introduction

In this blog post I will briefly describe the files and filegroups of a SQL Server database. I will then describe the most popular data storage technology, RAID (Redundant Array of Independent Disks), covering some of its internals and where it applies. An understanding of the various database files and RAID levels is crucial in building a SQL Server that can scale bigger, restore faster and query quicker.

SQL Server Files and Filegroups

A SQL Server database stores data and transactions in separate files. Data associated with tables, indexes and large objects is stored in data files, while transactions are stored sequentially in a log file. The data and transactions of a database can be stored in three types of files:

- Primary data files – Every database has exactly one primary data file. The recommended file name extension is .mdf. This is merely a best practice, and your company can have a policy for a different extension.

- Secondary data files – Secondary data files are all the data files other than the primary data file. They are optional and exist only when they have been defined in the database setup. The recommended file name extension is .ndf.

- Log files – Log files contain the information that is required to recover all transactions in the database. Log files are written sequentially. There must be at least one log file for each database, although there can be more than one. The recommended file name extension is .ldf. Under the SIMPLE recovery model the log is truncated automatically (at checkpoints); under the BULK_LOGGED or FULL recovery model it is truncated only after a transaction log backup.

RAID

The hard drive is the part most likely to fail in a computer or a storage device, because it is probably the only moving part. A hard drive typically spins at anywhere from 5,400 to 15,000 rotations per minute (RPM), which makes it prone to failure. RAID is the technology that addresses this problem. RAID stands for Redundant Array of Independent Disks. It is a redundant system that uses multiple hard drives to store your data. Basically, it allows you to turn multiple physical hard drives into one logical hard drive. Depending on the RAID configuration, the failure of one or more drives need not affect your data. This makes RAID a critical safeguard against data loss that should be considered for data storage. There are many RAID levels; however, three basic levels are widely used by companies: RAID 0, RAID 1 and RAID 5. RAID 0 is called disk striping, RAID 1 is called disk mirroring and RAID 5 is called disk striping with parity.

RAID 0 – Disk Striping

Disk striping in RAID 0 combines physical drives into one large logical drive. Assuming equal-sized disks, its maximum capacity is the sum of the individual disk sizes. For example, if you have 2 disks with an individual capacity of 1 TB in your RAID, you would effectively have 2 TB of usable disk space to store data. Data is split into stripes that are distributed evenly across all the physical disks. The stripe size varies by design, as determined by your DBA or demanded by your application. Let us assume that you have a stripe size of 16 KB. When a 64 KB block of data is written to a RAID 0 array with 4 disks, KB 1 – 16 are written to disk 1, KB 17 – 32 to disk 2, KB 33 – 48 to disk 3 and KB 49 – 64 to disk 4. Because this RAID level stripes your data evenly across all the disks, it makes writing and reading your data faster. However, one big concern with this design is that if a single hard disk fails, you will not be able to recover the data, because there is no redundancy to reconstruct it.
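The round-robin placement described above can be sketched in a few lines. This is a simplified model: sizes are in any consistent unit, and real controllers work at the level of disk sectors. The function name raid0_layout is introduced here for illustration.

```python
def raid0_layout(block_size, stripe_size, disks):
    """Map a block onto RAID 0 disks round-robin.

    Returns (disk_number, first_unit, last_unit) for each stripe unit, 1-based,
    with sizes in any consistent unit (e.g. KB)."""
    layout = []
    for i, start in enumerate(range(0, block_size, stripe_size)):
        end = min(start + stripe_size, block_size)
        layout.append((i % disks + 1, start + 1, end))
    return layout

for disk, first, last in raid0_layout(64, 16, 4):
    print(f"units {first}-{last} -> disk {disk}")
```

A block larger than one full row of stripes simply wraps around, so a fifth stripe unit would land on disk 1 again.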

RAID 1 – Disk Mirroring

Disk mirroring creates identical copies of your data. Typically only two drives are involved in this type of RAID. Disk mirroring makes an identical image of the hard drive; in other words, data written to one drive is automatically written to the other. The primary advantage of this configuration is that when one of the hard drives fails, your server is still up and running, because you have a complete copy of all the data on the other disk. While the server is still running, you can swap the failed disk for another hard disk and let the RAID rebuild the mirror. RAID 1 is mainly about reliability, achieved through data redundancy. Keep in mind that only 50% of the total disk space can be used for data storage. For example, if you have 2 disks with an individual capacity of 1 TB in your RAID, you would effectively have 1 TB of usable disk space to store data.

RAID 5 – Disk Striping with Parity

Disk striping with parity requires at least 3 hard drives in its simplest configuration. In the first stripe row, data block 1 is written to drive 1, data block 2 to drive 2, and the parity of blocks 1 and 2 to drive 3. In the next row the parity moves to a different drive: it is written to drive 1, while data blocks 3 and 4 are written to drives 2 and 3. Rotating the parity like this is hard to visualize, but the key point is that it allows any one hard drive to fail. The parity is used to rebuild the failed drive: the missing data is recomputed from the data and parity on the surviving drives. Another advantage is that RAID 5 gives you more usable disk space than RAID 1, though not as much as RAID 0. For example, if you have 3 disks with an individual capacity of 1 TB in your RAID, you would effectively have 2 TB of usable disk space. With 4 disks of 1 TB each, the total usable disk space would be 3 TB. Basically, you give up the capacity of one disk for redundancy.
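RAID 5 parity is conventionally the bitwise XOR of the data blocks in a stripe row. XOR is its own inverse, so XOR-ing the surviving blocks with the parity reproduces the missing block. This toy sketch uses 4-byte blocks instead of real disk blocks to show the rebuild after a drive failure.

```python
from functools import reduce

def xor_bytes(*blocks):
    """Byte-wise XOR of equal-length blocks, the parity calculation RAID 5 relies on."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

block1 = b"SQL "                     # data block on drive 1
block2 = b"Data"                     # data block on drive 2
parity = xor_bytes(block1, block2)   # parity block on drive 3

# Drive 2 fails: XOR of the surviving data block and the parity recovers its contents
rebuilt = xor_bytes(block1, parity)
print(rebuilt)   # b'Data'
```

The same calculation works for wider arrays: the parity is the XOR of every data block in the row, and any single missing block is the XOR of all the others plus the parity.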

RAID Levels 2, 3, 4, 6 and 10

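The remaining levels are variations on the basic ideas of striping, mirroring and parity, or nested combinations of them. RAID 2, 3 and 4 stripe data with dedicated error-correction or parity disks and are rarely used today. RAID 10 is a combination of RAID 1 and RAID 0, where your data is mirrored in addition to being striped across the disks. RAID 6 extends RAID 5 with a second, independent parity block in every stripe. It can therefore survive the failure of two drives, at the cost of two disks' worth of capacity.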
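The capacity examples in this post follow simple rules that can be collected in one place. The sketch below assumes equal-sized disks and ignores controller and file-system overhead. The table of minimum disk counts and usable-disk formulas is introduced here for illustration.

```python
# (minimum number of disks, function giving the number of usable disks), equal-sized disks
RAID_RULES = {
    0:  (2, lambda n: n),        # striping: every disk holds data
    1:  (2, lambda n: 1),        # mirroring: every disk holds the same copy
    5:  (3, lambda n: n - 1),    # one disk's worth of capacity holds parity
    6:  (4, lambda n: n - 2),    # two disks' worth of capacity holds parity
    10: (4, lambda n: n // 2),   # striped mirrors: half the disks hold copies
}

def usable_capacity(level, disks, disk_size):
    """Usable capacity of a RAID array built from `disks` equal-sized disks."""
    if level not in RAID_RULES:
        raise ValueError(f"unsupported RAID level: {level}")
    min_disks, usable_disks = RAID_RULES[level]
    if disks < min_disks or (level == 10 and disks % 2):
        raise ValueError(f"RAID {level} needs at least {min_disks} disks (an even number for RAID 10)")
    return usable_disks(disks) * disk_size

for level, disks in [(0, 2), (1, 2), (5, 3), (5, 4), (6, 4), (10, 4)]:
    print(f"RAID {level} with {disks} x 1 TB disks: {usable_capacity(level, disks, 1)} TB usable")
```

The RAID 0, 1 and 5 results match the examples given above: 2 TB, 1 TB, 2 TB and 3 TB.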

Summary

A redundant array of independent disks is a system that lets you configure hard disks to store data efficiently while also providing fault tolerance. RAID 0 is ideal for fast reads and writes and lets you combine several smaller disks into one large logical drive. Because this level provides no redundancy, take care to maintain a backup scheme for your data. RAID 1 is purely about providing redundancy for your data. RAID 5 provides improved read performance in addition to some redundancy, although writes are slower because the parity must be updated. RAID 1 and RAID 5 allow failed drives to be replaced and rebuilt while the server is still running, on hardware that supports hot-swappable disks. RAID is the right tool for business because it delivers flexibility and scalability for creating and managing enterprise data storage systems.


Dependency Injection: Unity Application Block

In this post I will show you how to use the Microsoft Unity Application Block to achieve Dependency Injection (DI). We will look at two ways of configuring the IoC container: first the “.config” way and then the “inline” method. Let us take a look at the following code snippet and see how it can be improved.

```csharp
using System;

class Program
{
    static void Main(string[] args)
    {
        Service svc = new Service();
        svc.Print();
    }
}

public class Service
{
    public void Print()
    {
        // Service creates its own Employee, so the two classes are tightly coupled
        Employee empl = new Employee();
        empl.PrintEmployeeTitle("Test Employee");
    }
}

public class Employee
{
    public void PrintEmployeeTitle(string name)
    {
        Console.WriteLine("Employee Name: {0}, Title:{1}", name, "Some Title");
    }
}
```

What does this code do?

The above code snippet is from a console application, where we create an instance of the Service class and call its Print method. The Print method in turn instantiates the Employee class and calls its PrintEmployeeTitle method, which writes the name and title of an employee to the console.

What is wrong?

Nothing. While there is nothing wrong with this code, if we observe closely, we notice that the Service class creates the Employee instance itself, so the Employee object cannot exist independently of the Service class. The two classes are tightly coupled: the Service class can only consume the one Employee implementation it has hard-coded.

What if we want to test more than one implementation of the Employee class, or the Employee class implementation is a work in progress? This is where the Dependency Injection design pattern comes to our rescue. I hope I have set some context before explaining DI and its implementation.

Solving the problem

Decoupling the life cycle management of the Employee class from the Service class is the primary objective. Decoupling the Employee class lets us 1) provide multiple implementations of the Employee class, 2) select the implementation that suits our purpose and 3) manage the life cycle of the Employee class. We define an interface IEmployee with one method, PrintEmployeeTitle, and two implementations for demo purposes. The first is what we already had above, and the second is a MockEmployee class.

```csharp
public class Employee : IEmployee
{
    public void PrintEmployeeTitle(string name)
    {
        Console.WriteLine("Employee Name: {0}, Title:{1}", name, "Some Title");
    }
}

public class MockEmployee : IEmployee
{
    public void PrintEmployeeTitle(string name)
    {
        Console.WriteLine("Employee Name: {0}, Title:{1}", name, "Some MOCK Title");
    }
}

public interface IEmployee
{
    void PrintEmployeeTitle(string name);
}
```

Let us see how we can delegate the Employee class instantiation to the client (Class:Program; Method: Main) and then inject the Employee object into the Service object.

Dependency Injection

The Service class has a dependency on the Employee class, and our objective here is to inject this dependency into the Service class from the client. Dependency injection is a software design pattern that allows a choice of component to be made at run-time rather than compile time [2]. One way to achieve this is to pass the Employee object reference to the Service class constructor, as in the code below:

```csharp
class Program
{
    static void Main(string[] args)
    {
        Employee empl = new Employee();
        Service svc = new Service(empl);
        svc.Print();
    }
}

public class Service
{
    private IEmployee empl;

    public Service(IEmployee empl)
    {
        this.empl = empl;
    }

    public void Print()
    {
        empl.PrintEmployeeTitle("Test Employee");
    }
}
```

Another way of injecting the Employee reference into the Service object is to assign the Employee instance to an IEmployee property of the Service class (setter injection).

```csharp
class Program
{
    static void Main(string[] args)
    {
        Employee empl = new Employee();
        Service svc = new Service();
        svc.empl = empl;
        svc.Print();
    }
}

public class Service
{
    IEmployee _empl;

    public IEmployee empl
    {
        set
        {
            this._empl = value;
        }
    }

    public void Print()
    {
        _empl.PrintEmployeeTitle("Test Employee");
    }
}
```

So far we have decoupled the Service class from the Employee class. However, the client still has to create an instance of the Employee class itself to perform the injection. Any change to the Employee creation mechanism will require a code change and a recompile. This is where an IoC framework comes in handy, automating the creation and injection of the dependency through a single configuration.

Inversion of control

In software engineering, Inversion of Control (IoC) is an object-oriented programming practice where the object coupling is bound at run time by an assembler object and is typically not known at compile time using static analysis [1]. As discussed earlier, we are going to transfer control of creating the Employee object from the client to the IoC framework. That is what makes this an “Inversion of Control”.
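To make the idea concrete, here is a toy container written in Python. It is not Unity and does not mirror Unity's API. It only illustrates what an IoC container does. Abstractions are registered against implementations, optionally as singletons. When asked for a class, the container builds its constructor dependencies first and passes them in (constructor injection). It discovers dependencies from constructor type annotations via typing.get_type_hints, which is an assumption of this sketch. The class names mirror the C# example; all names are illustrative.

```python
import typing
from abc import ABC, abstractmethod

class Container:
    """Toy IoC container (illustration only, not Unity's API): maps abstractions to
    implementations and builds objects by resolving annotated constructor parameters."""

    def __init__(self):
        self._registrations = {}   # abstraction -> (implementation, is_singleton)
        self._singletons = {}      # implementation -> shared instance

    def register_type(self, abstraction, implementation, singleton=False):
        self._registrations[abstraction] = (implementation, singleton)

    def resolve(self, cls):
        implementation, singleton = self._registrations.get(cls, (cls, False))
        if singleton and implementation in self._singletons:
            return self._singletons[implementation]
        init = implementation.__init__
        hints = {} if init is object.__init__ else typing.get_type_hints(init)
        hints.pop("return", None)
        # Constructor injection: build each annotated dependency first, recursively
        instance = implementation(**{name: self.resolve(dep) for name, dep in hints.items()})
        if singleton:
            self._singletons[implementation] = instance
        return instance

class IEmployee(ABC):
    @abstractmethod
    def print_employee_title(self, name: str) -> None: ...

class Employee(IEmployee):
    def print_employee_title(self, name):
        print(f"Employee Name: {name}, Title:Some Title")

class MockEmployee(IEmployee):
    def print_employee_title(self, name):
        print(f"Employee Name: {name}, Title:Some MOCK Title")

class Service:
    def __init__(self, empl: IEmployee):
        self._empl = empl

    def print_title(self):
        self._empl.print_employee_title("Test Employee")

container = Container()
container.register_type(IEmployee, MockEmployee, singleton=True)  # register Employee instead to switch
svc = container.resolve(Service)
svc.print_title()   # Employee Name: Test Employee, Title:Some MOCK Title
```

Switching implementations means changing only the registration line, not Service or the client code that uses it. That is the benefit Unity provides through its configuration file or RegisterType call.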

The configured entities are loaded into an IoC container at run-time and injected into the appropriate classes. Several IoC containers are available for implementing Dependency Injection in .NET; this post uses the Microsoft Unity container.

Microsoft Unity IoC Container

Now let us look at the two ways of configuring and implementing DI using the Microsoft Unity container. Before you can use the Unity container, you have to download and install the Microsoft Unity Application Block from the Microsoft Patterns and Practices website. The DLLs necessary for this implementation can be found under the “Drive:/Program Files/Microsoft Unity Application Block x.0/Bin/” folder and should be added as references to your project.

Please accept my apologies for a very crude representation of the client (Class: Program, Method: Main) being able to select one of the three implementations of the Employee class using Dependency Injection via an IoC container.

Application Configuration File

To implement DI using the application configuration file, we have to define the Unity configuration section, define a container, and register the interface together with the class that implements it (the class that gets injected).

```xml
<configuration>
  <configSections>
    <section name="unity"
             type="Microsoft.Practices.Unity.Configuration.UnityConfigurationSection, Microsoft.Practices.Unity.Configuration" />
  </configSections>
  <unity xmlns="http://schemas.microsoft.com/practices/2010/unity">
    <!-- "singleton" is an alias for the container-controlled (one instance per container) lifetime -->
    <alias alias="singleton" type="Microsoft.Practices.Unity.ContainerControlledLifetimeManager, Microsoft.Practices.Unity" />
    <container name="TestService">
      <!-- Map IEmployee to the default Employee implementation -->
      <register type="UnityFrameworkDemo.IEmployee, UnityFrameworkDemo"
                mapTo="UnityFrameworkDemo.Employee, UnityFrameworkDemo">
        <lifetime type="singleton" />
      </register>
    </container>
  </unity>
</configuration>
```

I also had to add a reference to the System.Configuration assembly to the project. Once this configuration is set, we have to update the client to 1) load the container that we defined in the configuration and 2) resolve the Service class based on that configuration:

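```csharp
using System;
using Microsoft.Practices.Unity;
using Microsoft.Practices.Unity.Configuration;

class Program
{
    static void Main(string[] args)
    {
        try
        {
            IUnityContainer container = new UnityContainer();

            // Load the "TestService" container defined in the <unity> section of App.config
            container.LoadConfiguration("TestService");

            // Unity calls Service(IEmployee) and injects the implementation mapped in the config
            Service svc = container.Resolve<Service>();

            if (svc != null)
                svc.Print();
            else
                Console.WriteLine("Could not load the Service Class");
        }
        catch (Exception ex)
        {
            // Resolution failures (for example, a missing mapping) surface as exceptions
            Console.WriteLine(ex.Message);
        }
    }
}

public class Service
{
    IEmployee _empl;

    public Service(IEmployee empl)
    {
        this._empl = empl;
    }

    public void Print()
    {
        _empl.PrintEmployeeTitle("Test Employee");
    }
}
```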

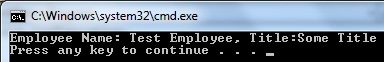

You may also have noticed that I have added a constructor that accepts a parameter of type IEmployee to the Service class. The IoC framework will use this constructor to create an instance of the Service class, passing in an instance of the configured IEmployee implementation. With the default Employee implementation, the program prints “Employee Name: Test Employee, Title:Some Title”.

Switching from the default implementation to the MockEmployee implementation is as simple as putting the MockEmployee class name in the mapTo attribute of the register element. The output then becomes “Employee Name: Test Employee, Title:Some MOCK Title”.

Inline

Lastly, let us look at how we can configure an implementation inside the client instead of in the application configuration file. You register the interface and its implementation with the container in the client. The client is then ready to resolve the Service instance and call its methods, as shown in the code below:

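```csharp
IUnityContainer container = new UnityContainer();

// Map IEmployee to an implementation in code (use MockEmployee here to switch implementations)
container.RegisterType<IEmployee, Employee>();

Service svc = container.Resolve<Service>();
if (svc != null)
    svc.Print();
```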

The Unity framework provides a fantastic approach to decoupling application-layer code. You can define several containers, register several classes with each container, and enjoy the flexibility of implementing Dependency Injection with the Unity IoC container.

Happy Fourth of July!

References:

1. Inversion of Control – IOC – Wikipedia

2. Dependency Injection – Wikipedia